The first Data Product Builder on the market

Make better decisions.

Achieve measurable results.

Turn data silos into reliable, valuable data products with One Data. The AI-powered Data Product Builder is an all-in-one solution that lets you create, manage, and share data products 80% faster.

Tap the full potential of your data silos.

Secure the decisive competitive advantage.

One Data is purpose-built so that everyone, from data experts to business users at companies such as thyssenkrupp, SCHOTT, and ebm-papst, can create, manage, and share data products quickly.

These companies trust One Data

Connect all your business data in one place

The core features of One Data

Discover the AI-powered features of the Data Product Builder. They help your data experts and business users turn valuable data into data products faster, for use across the entire company.

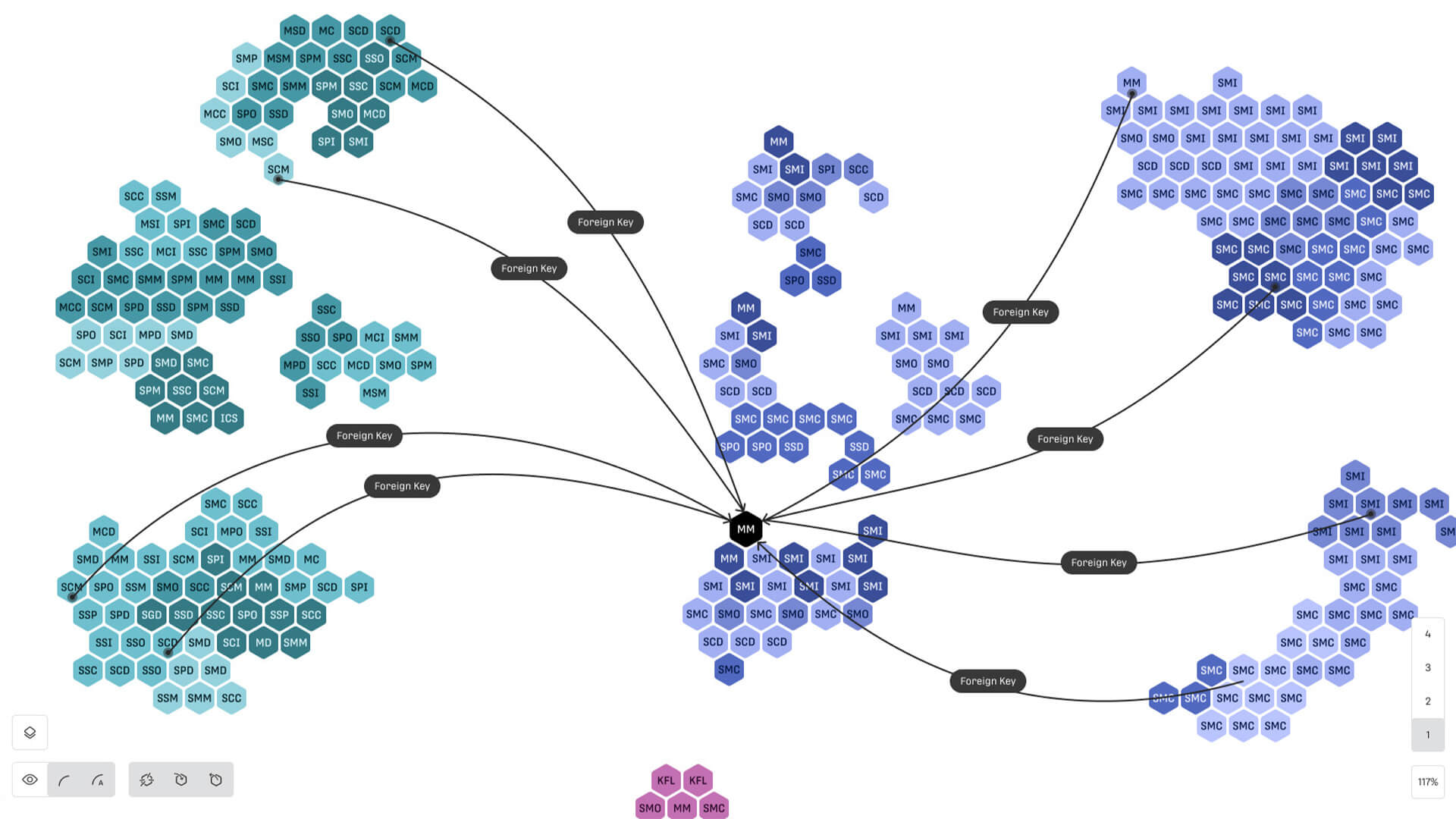

Interactive data map

Visualize and find data assets

Get a complete overview so you can quickly find, understand, and review available datasets. Connect your most important data in one central place and cut the time it takes to find relevant data assets.

Data preparation

Harmonize data in minutes

Connect unrelated data from different systems to build comprehensive data products. Preparation is automated with AI-powered data-linking features.

Data quality

Ensure reliable decisions

Set up regular quality checks to maintain data products across their entire lifecycle. Identify the root causes of quality problems with automated and custom checks. You can also set up an early-warning system for data quality in your applications.

Data product marketplace

Enrich your projects with data

Find ready-to-use data products on the data product marketplace, a central space for data-driven collaboration. Here, business users can search for data products, access them, and use them together to gain new insights.

Data flows through every department.

Success requires shared responsibility.

“Data is for everyone and should be used to create value across the entire company. Thanks to One Data, we were able to bring about an internal shift toward treating data as products. Data products are now provided and made usable across departments.”

Martin Kemmer

Head of Smart Factory & OT 4.0

SCHOTT AG

Regardless of industry, company size, or stage of development, every company depends on its individual departments to operate successfully.

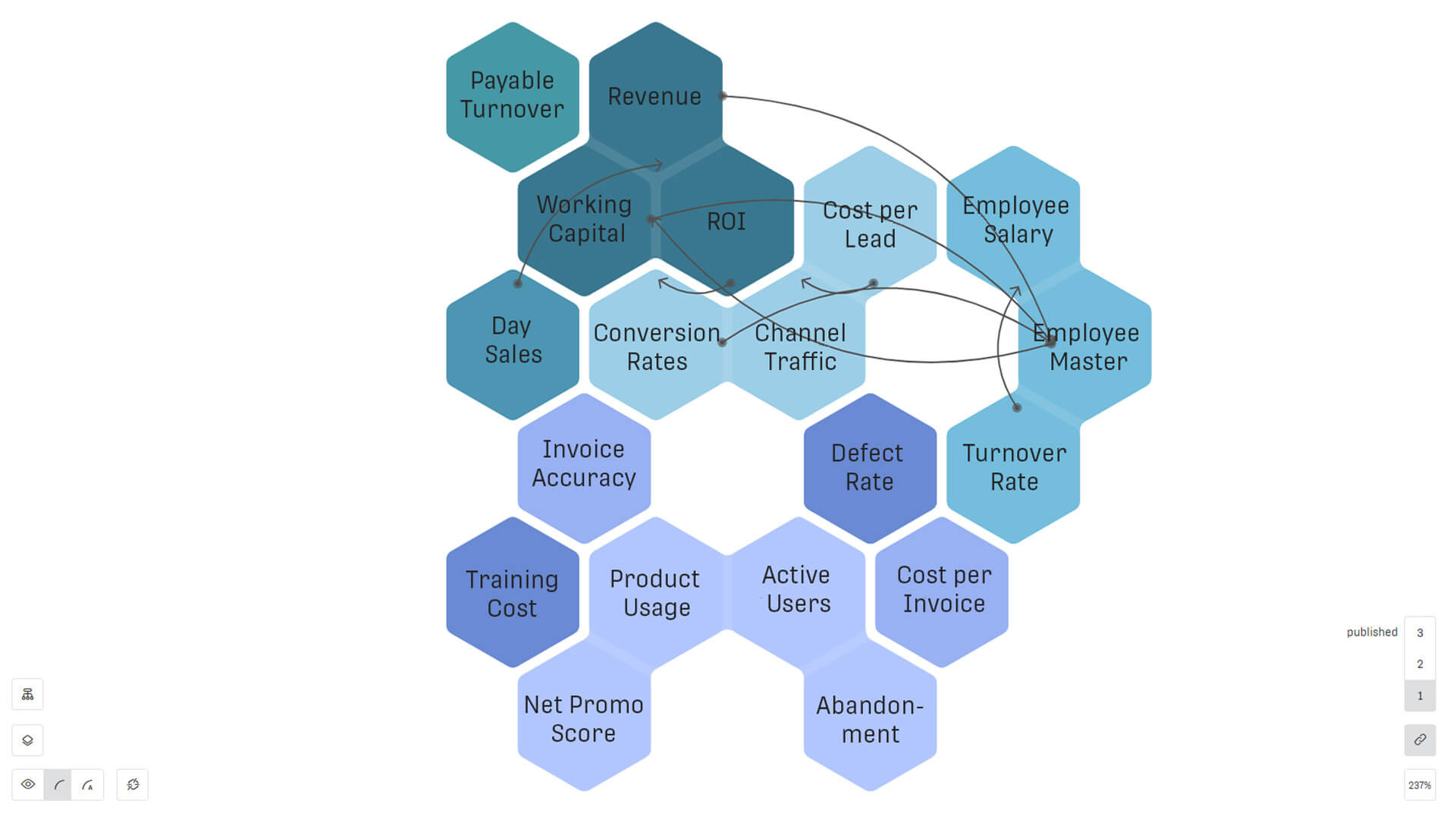

With the right data products, every department can define and track meaningful KPIs, adapt to market dynamics, and allocate resources optimally.

Tackle the challenges of every industry with data products

Manufacturing

Manufacturers strive for efficiency in a constantly changing economic environment. Companies such as thyssenkrupp build data products to overcome production challenges.

Pharmaceutical industry

Pharmaceutical companies operate in a complex environment and need data products to conduct groundbreaking research and development and to protect their intellectual property.

Supply chains

For companies that manage supply chains, slumps and disruptions are ever-present. Companies such as Markant use data products to allocate resources effectively and deliver more goods and services on time.

Get more value from your data

Together with our partner network, One Data can support you even better in breaking down data silos and implementing Data Mesh and Data Fabric.

Achieve greater impact with data products

Our world, the market, and the conditions businesses face are constantly changing. That is why making optimal use of your data is exactly what sets you apart from your competitors.

We built One Data to help you do that. By connecting your data across systems and making it accessible across teams, you can see your entire data landscape and create new value from it. It is time to use your data intelligently and realize the value of data products.

Further resources

Whitepaper | The future of data-driven companies

Learn how to speed up data preparation, improve data quality, and gain insights from your data.

Customer story | Connected data leads to cultural change

SCHOTT AG is implementing the data mesh approach and paving the way for innovation through data products.

Gartner® Report | A sound basis for business decisions

Access to objective, actionable Gartner® insights
